In the ever-evolving world of technology, few names carry as much weight as NVIDIA, and once again the company has raised the bar. Unveiled at GTC in March 2024, NVIDIA’s Blackwell architecture is more than just a chip; it’s a blueprint for the future of AI, data centers, and high-performance computing.

Whether you’re an AI developer, a cloud computing enthusiast, or a curious techie, here’s why the Blackwell GPU architecture is the most important silicon story of the year.

🧠 What Is the Blackwell Architecture?

Named after the renowned mathematician David Blackwell, this new architecture represents NVIDIA’s successor to the Hopper architecture, which powered game-changing AI models and deep learning systems worldwide.

Blackwell chips are built to handle the next era of AI, from trillion-parameter language models to real-time inference and digital twin simulations.

💡 In short: Blackwell is designed to run AI models faster, at lower cost, and with less energy per unit of work than the previous generation.

⚙️ Blackwell’s Technical Highlights

So what makes Blackwell so revolutionary? Let’s break it down:

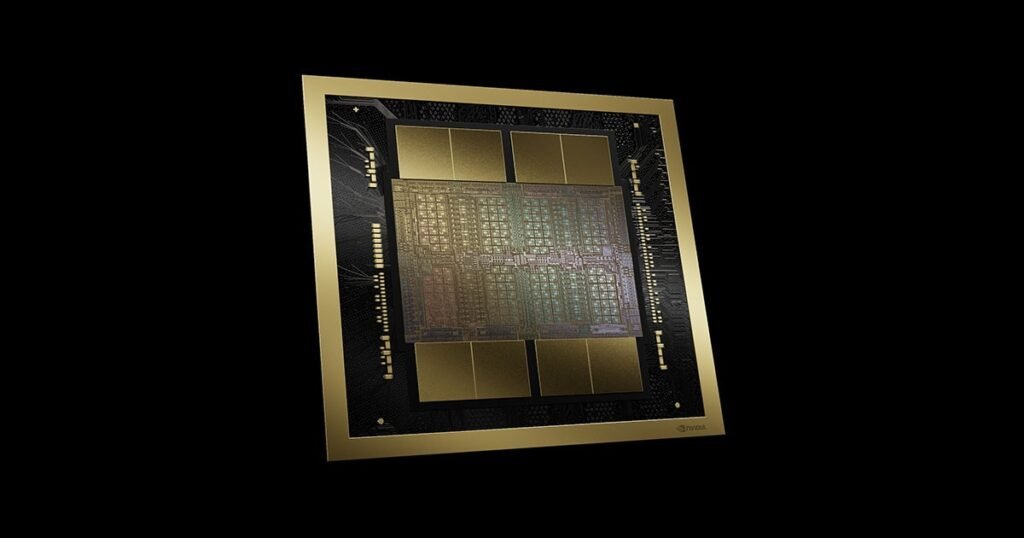

🧩 Dual-Die GPU Design

Blackwell uses a dual-die design: two reticle-limit dies are joined by a 10 TB/s chip-to-chip link and presented to software as a single GPU. This enables:

- Significant performance increases

- Greater memory bandwidth

- Better scalability across workloads

🚀 Massive Speed Gains

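According to NVIDIA’s launch figures for the GB200 NVL72 system, Blackwell delivers:

- Up to 4x faster training of large language models compared to the same number of Hopper H100 GPUs

- Up to 30x faster real-time inference on trillion-parameter models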

- A new level of parallel compute performance for data centers
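
To make a speedup factor concrete, here is a minimal sketch that turns it into wall-clock time. The 90-day baseline and the function name `projected_days` are hypothetical, chosen only for illustration, and vendor figures are best-case numbers, so the sketch also shows what happens if only half of the claimed factor is realized.

```python
def projected_days(baseline_days: float, speedup: float) -> float:
    """Wall-clock time after applying a speedup factor to a baseline run."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_days / speedup


# Hypothetical 90-day training run on the previous generation.
baseline = 90.0
for label, factor in [("claimed 4x", 4.0), ("half of claimed, 2x", 2.0)]:
    print(f"{label}: {projected_days(baseline, factor):.1f} days")
```

The gap between the two results is why it is worth benchmarking your own workload before planning around a headline number.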

🔌 Energy Efficiency

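With increasing demand for sustainability, Blackwell’s headline efficiency gain is in energy per unit of work, not lower absolute power draw: NVIDIA claims up to 25x lower energy consumption for LLM inference than Hopper, even though a single Blackwell GPU can draw more power than its predecessor. Thanks to fifth-generation NVLink, HBM3e memory, and more intelligent scheduling, it does more with less, a major win for hyperscalers and enterprise AI.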
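
Efficiency claims like this are easiest to reason about as energy per unit of work rather than raw power draw. The sketch below is a rough illustration only: the wattage and throughput figures are made up, not measurements of any real GPU, and `joules_per_token` is a hypothetical helper.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy spent per generated token (one watt is one joule per second)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return power_watts / tokens_per_second


# Hypothetical figures for an older and a newer accelerator.
old = joules_per_token(power_watts=700.0, tokens_per_second=1_000.0)
new = joules_per_token(power_watts=1_000.0, tokens_per_second=10_000.0)

print(f"old: {old:.2f} J/token")
print(f"new: {new:.2f} J/token")
print(f"energy per token improves by {old / new:.1f}x")
```

With these made-up inputs, the newer part draws more power yet spends roughly 7x less energy per token, because throughput grows much faster than wattage.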

🤖 Built for the AI Age

The Blackwell chip isn’t just about speed—it’s built to handle the explosive growth of AI in every industry:

- Generative AI: Enables training of trillion-parameter models with faster iteration cycles

- Cloud Services: Targets the large-scale inference behind AI assistants such as ChatGPT, Gemini, and Claude

- Autonomous Systems: Drives real-time decision-making in self-driving cars and robotics

- Digital Twins: Powers large-scale simulations for factories, cities, and the climate
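
To put the trillion-parameter figure in perspective, here is a back-of-the-envelope sketch of how much memory the weights alone would need at different numeric precisions. The 192 GB per-GPU capacity is an assumption used for illustration, the helper names are hypothetical, and the estimate ignores activations, optimizer state, and caches, which add substantially more in practice.

```python
import math


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def min_gpus_for_weights(num_params: float, bits_per_param: int,
                         gpu_memory_gb: float) -> int:
    """Smallest GPU count whose combined memory fits the weights."""
    return math.ceil(weight_memory_gb(num_params, bits_per_param) / gpu_memory_gb)


PARAMS = 1e12          # one trillion parameters
GPU_MEMORY_GB = 192.0  # assumed per-GPU memory, for illustration only

for bits in (16, 8, 4):
    size = weight_memory_gb(PARAMS, bits)
    gpus = min_gpus_for_weights(PARAMS, bits, GPU_MEMORY_GB)
    print(f"{bits}-bit weights: {size:,.0f} GB, at least {gpus} GPUs")
```

Halving the precision halves the footprint, which is one reason lower-precision formats matter so much for serving very large models.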

With the rise of AI-as-a-Service platforms, Blackwell gives cloud giants like Microsoft, Google, and AWS the raw power they need to keep up.

🏢 Data Center Game-Changer

Blackwell is already making its way into NVIDIA DGX and HGX systems, along with supercomputing clusters and AI factories designed for model training at scale.

NVIDIA also announced its GB200 Grace Blackwell Superchip, which pairs two Blackwell GPUs with one Arm-based Grace CPU over NVLink-C2C for the ultimate AI hardware stack.

📈 Industry Impact & Market Implications

NVIDIA isn’t just shipping chips—it’s shaping economies. The release of Blackwell has already:

- Boosted NVIDIA’s market valuation

- Accelerated AI infrastructure spending

- Set the standard for competitors like AMD and Intel to chase

🚨 Blackwell isn’t just a performance leap—it’s a technological arms race.

🧠 Final Thoughts: Why Blackwell Matters

The NVIDIA Blackwell architecture is more than just a next-gen chip. It’s a foundation for the AI-driven future, where speed, efficiency, and scale aren’t just nice to have—they’re essential.

Whether you’re training massive language models, running complex simulations, or powering AI apps in the cloud, Blackwell is the muscle behind the magic.

In the race for AI dominance, NVIDIA has once again taken the lead.